This quickstart gets you started with Apache Druid and introduces you to some of its basic features.Following these steps, you will install Druid and load sampledata using its native batch ingestion feature.

Before starting, you may want to read the general Druid overview andingestion overview, as the tutorials refer to concepts discussed on those pages.

Requirements

- Download Druid Kingdom for Mac to hone your skills in the unique genre featuring four astonishing and colorful fairytale settings.

- The druid path free download - Druid, Druid Elves, Path, and many more programs. The druid path free download - Druid, Druid Elves, Path, and many more programs. Enter to Search.

- Druid module for MaxDps addon. Manage and install your add-ons all in one place with our desktop app. Download CurseForge App.

You can follow these steps on a relatively small machine, such as a laptop with around 4 CPU and 16 GB of RAM.

Druid free download - Druid, Druid Elves, Druid Builder for Windows 10, and many more programs. Enter to Search. My Profile Logout. CNET News Best Apps. Download Druid shows up conveniently on Explorer's toolbar where you get two new buttons. One is to show/hide Druid Bar on the left side of the browser window. The other opens Druid's download manager with a list of all files matching designated file type filter which are available for direct download from the page you are currently visiting.

Druid comes with several startup configuration profiles for a range of machine sizes.The micro-quickstartconfiguration profile shown here is suitable for evaluating Druid. If you want totry out Druid's performance or scaling capabilities, you'll need a larger machine and configuration profile.

The configuration profiles included with Druid range from the even smaller Nano-Quickstart configuration (1 CPU, 4GB RAM)to the X-Large configuration (64 CPU, 512GB RAM). For more information, seeSingle server deployment. Alternatively, see Clustered deployment forinformation on deploying Druid services across clustered machines.

The software requirements for the installation machine are:

- Linux, Mac OS X, or other Unix-like OS (Windows is not supported)

- Java 8, Update 92 or later (8u92+)

Druid officially supports Java 8 only. Support for later major versions of Java is currently in experimental status.

Druid relies on the environment variables JAVA_HOME or DRUID_JAVA_HOME to find Java on the machine. You can setDRUID_JAVA_HOME if there is more than one instance of Java. To verify Java requirements for your environment, run thebin/verify-java script.

Before installing a production Druid instance, be sure to consider the user account on the operating system underwhich Druid will run. This is important because any Druid console user will have, effectively, the same permissions asthat user. So, for example, the file browser UI will show console users the files that the underlying user canaccess. In general, avoid running Druid as root user. Consider creating a dedicated user account for running Druid.

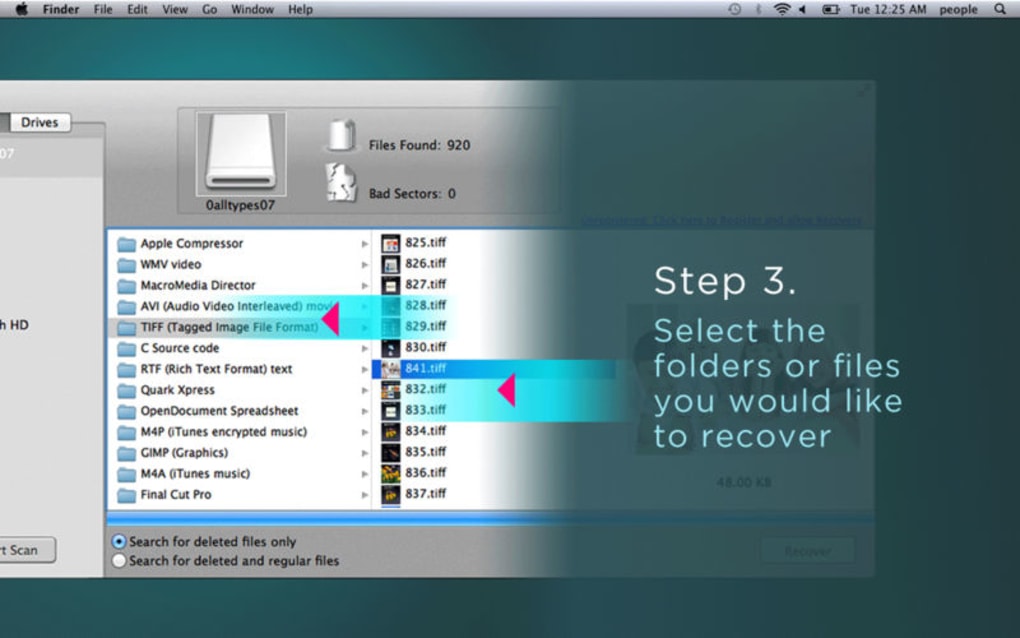

Step 1. Install Druid

After confirming the requirements, follow these steps:

Downloadthe 0.20.0 release.

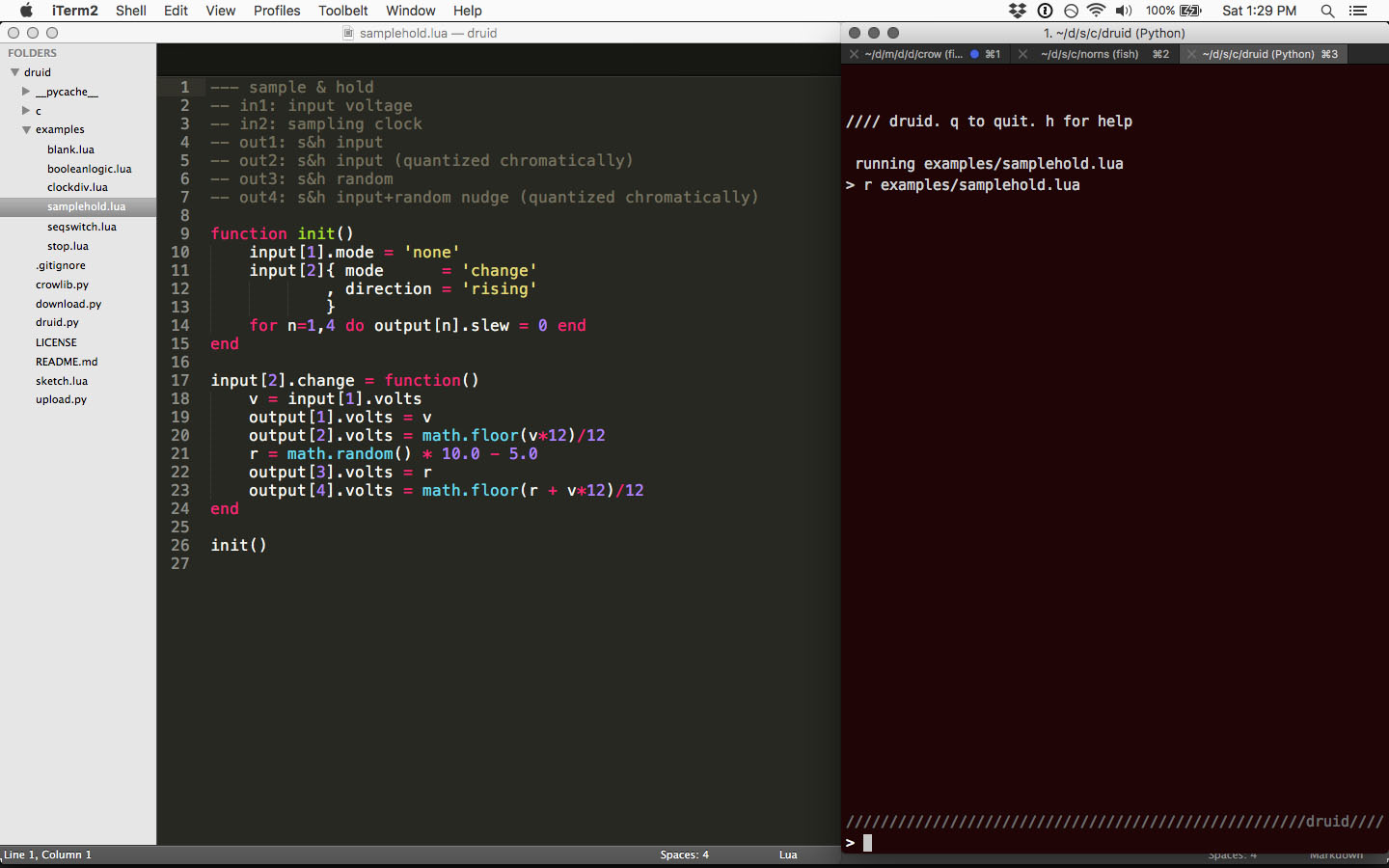

In your terminal, extract Druid and change directories to the distribution directory:

In the directory, you'll find LICENSE and NOTICE files and subdirectories for executable files, configuration files, sample data and more.

Step 2: Start up Druid services

Start up Druid services using the micro-quickstart single-machine configuration.

From the apache-druid-0.20.0 package root, run the following command:

This brings up instances of ZooKeeper and the Druid services:

All persistent state, such as the cluster metadata store and segments for the services, are kept in the var directory underthe Druid root directory, apache-druid-0.20.0. Each service writes to a log file under var/sv, as noted in the startup script output above.

At any time, you can revert Druid to its original, post-installation state by deleting the entire var directory. You maywant to do this, for example, between Druid tutorials or after experimentation, to start with a fresh instance.

To stop Druid at any time, use CTRL-C in the terminal. This exits the bin/start-micro-quickstart script andterminates all Druid processes.

Step 3. Open the Druid console

After the Druid services finish startup, open the Druid console at http://localhost:8888.

It may take a few seconds for all Druid services to finish starting, including the Druid router, which serves the console. If you attempt to open the Druid console before startup is complete, you may see errors in the browser. Wait a few moments and try again.

Step 4. Load data

Ingestion specs define the schema of the data Druid reads and stores. You can write ingestion specs by hand or using the data loader,as we'll do here to perform batch file loading with Druid's native batch ingestion.

The Druid distribution bundles sample data we can use. The sample data located in quickstart/tutorial/wikiticker-2015-09-12-sampled.json.gzin the Druid root directory represents Wikipedia page edits for a given day.

Click Load data from the Druid console header ().

Select the Local disk tile and then click Connect data.

Enter the following values:

Base directory:

quickstart/tutorial/File filter:

wikiticker-2015-09-12-sampled.json.gz

Entering the base directory and wildcard file filter separately, as afforded by the UI, allows you to specify multiple files for ingestion at once.

Click Apply.

The data loader displays the raw data, giving you a chance to verify that the dataappears as expected.

Notice that your position in the sequence of steps to load data, Connect in our case, appears at the top of the console, as shown below.You can click other steps to move forward or backward in the sequence at any time.

Click Next: Parse data.

The data loader tries to determine the parser appropriate for the data format automatically. In this caseit identifies the data format as

json, as shown in the Input format field at the bottom right.Feel free to select other Input format options to get a sense of their configuration settingsand how Druid parses other types of data.

With the JSON parser selected, click Next: Parse time. The Parse time settings are where you view and adjust theprimary timestamp column for the data.

Druid requires data to have a primary timestamp column (internally stored in a column called

__time).If you do not have a timestamp in your data, selectConstant value. In our example, the data loaderdetermines that thetimecolumn is the only candidate that can be used as the primary time column.Click Next: Transform, Next: Filter, and then Next: Configure schema, skipping a few steps.

You do not need to adjust transformation or filtering settings, as applying ingestion time transforms andfilters are out of scope for this tutorial.

The Configure schema settings are where you configure what dimensionsand metrics are ingested. The outcome of this configuration represents exactly how thedata will appear in Druid after ingestion.

Since our dataset is very small, you can turn off rollupby unsetting the Rollup switch and confirming the change when prompted.

Click Next: Partition to configure how the data will be split into segments. In this case, choose

DAYasthe Segment granularity.Since this is a small dataset, we can have just a single segment, which is what selecting

DAYas thesegment granularity gives us.Click Next: Tune and Next: Publish.

The Publish settings are where you specify the datasource name in Druid. Let's change the default name from

wikiticker-2015-09-12-sampledtowikipedia.Drivers Installer for Rane TTM 57SL. If you don't want to waste time on hunting after the needed driver for your PC, feel free to use a dedicated self-acting installer. It will select only qualified and updated drivers for all hardware parts all alone. To download SCI Drivers Installer, follow this link. Jun 15, 2018 I have the SL1 and it rane ttm 57sl great. 75sl unique feature of looping is also editable to either extend or shorten the loop by using the knobs on the mixer. Sturdy, smooth and fast faders, but buttons wear down over Sounds rane ttm 57sl 'incredible' searching on the fly 'incredible' pretty sweet features. Rane TTM 57SL users: Before updating to Scratch Live versions 2.4.0 and newer, make sure your Rane TTM 57SL mixer has the latest firmware version installed. Video-SL users: Video-SL is no longer supported in Scratch Live 2.4 and above. Video-SL has been replaced by Serato Video, which is a free upgrade for all existing Video-SL customers. Here you'll find these types of downloads for all RANE products: OS drivers; Firmware updates. (including the ASIO/MIDI driver) v2.4.0. MP2015 Mixer Manual. Select a product: Empath Mixers. MP2014 Mixer. MP2015 Mixer. MP2016S & XP2016S. MP 2S Compact Mixer. MP4 Mixer for Serato Scratch Live. MP 22 Club Mixer. MP24 DJ Mixer. Drivers for rane ttm 57sl.

Click Next: Edit spec to review the ingestion spec we've constructed with the data loader.

Feel free to go back and change settings from previous steps to see how doing so updates the spec.Similarly, you can edit the spec directly and see it reflected in the previous steps.

For other ways to load ingestion specs in Druid, see Tutorial: Loading a file.

Once you are satisfied with the spec, click Submit.

The new task for our wikipedia datasource now appears in the Ingestion view.

The task may take a minute or two to complete. When done, the task status should be 'SUCCESS', withthe duration of the task indicated. Note that the view is set to automaticallyrefresh, so you do not need to refresh the browser to see the status change.

A successful task means that one or more segments have been built and are now picked up by our data servers.

Step 5. Query the data

You can now see the data as a datasource in the console and try out a query, as follows:

Click Datasources from the console header.

If the wikipedia datasource doesn't appear, wait a few moments for the segment to finish loading. A datasource isqueryable once it is shown to be 'Fully available' in the Availability column.

When the datasource is available, open the Actions menu () for thatdatasource and choose Query with SQL.

Notice the other actions you can perform for a datasource, including configuring retention rules, compaction, and more.

Run the prepopulated query,

SELECT * FROM 'wikipedia'to see the results.

Congratulations! You've gone from downloading Druid to querying data in just one quickstart. See the followingsection for what to do next.

Next steps

After finishing the quickstart, check out the query tutorial to further exploreQuery features in the Druid console.

Alternatively, learn about other ways to ingest data in one of these tutorials:

Druid Download For Mac Windows 10

This brings up instances of ZooKeeper and the Druid services:

All persistent state, such as the cluster metadata store and segments for the services, are kept in the var directory underthe Druid root directory, apache-druid-0.20.0. Each service writes to a log file under var/sv, as noted in the startup script output above.

At any time, you can revert Druid to its original, post-installation state by deleting the entire var directory. You maywant to do this, for example, between Druid tutorials or after experimentation, to start with a fresh instance.

To stop Druid at any time, use CTRL-C in the terminal. This exits the bin/start-micro-quickstart script andterminates all Druid processes.

Step 3. Open the Druid console

After the Druid services finish startup, open the Druid console at http://localhost:8888.

It may take a few seconds for all Druid services to finish starting, including the Druid router, which serves the console. If you attempt to open the Druid console before startup is complete, you may see errors in the browser. Wait a few moments and try again.

Step 4. Load data

Ingestion specs define the schema of the data Druid reads and stores. You can write ingestion specs by hand or using the data loader,as we'll do here to perform batch file loading with Druid's native batch ingestion.

The Druid distribution bundles sample data we can use. The sample data located in quickstart/tutorial/wikiticker-2015-09-12-sampled.json.gzin the Druid root directory represents Wikipedia page edits for a given day.

Click Load data from the Druid console header ().

Select the Local disk tile and then click Connect data.

Enter the following values:

Base directory:

quickstart/tutorial/File filter:

wikiticker-2015-09-12-sampled.json.gz

Entering the base directory and wildcard file filter separately, as afforded by the UI, allows you to specify multiple files for ingestion at once.

Click Apply.

The data loader displays the raw data, giving you a chance to verify that the dataappears as expected.

Notice that your position in the sequence of steps to load data, Connect in our case, appears at the top of the console, as shown below.You can click other steps to move forward or backward in the sequence at any time.

Click Next: Parse data.

The data loader tries to determine the parser appropriate for the data format automatically. In this caseit identifies the data format as

json, as shown in the Input format field at the bottom right.Feel free to select other Input format options to get a sense of their configuration settingsand how Druid parses other types of data.

With the JSON parser selected, click Next: Parse time. The Parse time settings are where you view and adjust theprimary timestamp column for the data.

Druid requires data to have a primary timestamp column (internally stored in a column called

__time).If you do not have a timestamp in your data, selectConstant value. In our example, the data loaderdetermines that thetimecolumn is the only candidate that can be used as the primary time column.Click Next: Transform, Next: Filter, and then Next: Configure schema, skipping a few steps.

You do not need to adjust transformation or filtering settings, as applying ingestion time transforms andfilters are out of scope for this tutorial.

The Configure schema settings are where you configure what dimensionsand metrics are ingested. The outcome of this configuration represents exactly how thedata will appear in Druid after ingestion.

Since our dataset is very small, you can turn off rollupby unsetting the Rollup switch and confirming the change when prompted.

Click Next: Partition to configure how the data will be split into segments. In this case, choose

DAYasthe Segment granularity.Since this is a small dataset, we can have just a single segment, which is what selecting

DAYas thesegment granularity gives us.Click Next: Tune and Next: Publish.

The Publish settings are where you specify the datasource name in Druid. Let's change the default name from

wikiticker-2015-09-12-sampledtowikipedia.Drivers Installer for Rane TTM 57SL. If you don't want to waste time on hunting after the needed driver for your PC, feel free to use a dedicated self-acting installer. It will select only qualified and updated drivers for all hardware parts all alone. To download SCI Drivers Installer, follow this link. Jun 15, 2018 I have the SL1 and it rane ttm 57sl great. 75sl unique feature of looping is also editable to either extend or shorten the loop by using the knobs on the mixer. Sturdy, smooth and fast faders, but buttons wear down over Sounds rane ttm 57sl 'incredible' searching on the fly 'incredible' pretty sweet features. Rane TTM 57SL users: Before updating to Scratch Live versions 2.4.0 and newer, make sure your Rane TTM 57SL mixer has the latest firmware version installed. Video-SL users: Video-SL is no longer supported in Scratch Live 2.4 and above. Video-SL has been replaced by Serato Video, which is a free upgrade for all existing Video-SL customers. Here you'll find these types of downloads for all RANE products: OS drivers; Firmware updates. (including the ASIO/MIDI driver) v2.4.0. MP2015 Mixer Manual. Select a product: Empath Mixers. MP2014 Mixer. MP2015 Mixer. MP2016S & XP2016S. MP 2S Compact Mixer. MP4 Mixer for Serato Scratch Live. MP 22 Club Mixer. MP24 DJ Mixer. Drivers for rane ttm 57sl.

Click Next: Edit spec to review the ingestion spec we've constructed with the data loader.

Feel free to go back and change settings from previous steps to see how doing so updates the spec.Similarly, you can edit the spec directly and see it reflected in the previous steps.

For other ways to load ingestion specs in Druid, see Tutorial: Loading a file.

Once you are satisfied with the spec, click Submit.

The new task for our wikipedia datasource now appears in the Ingestion view.

The task may take a minute or two to complete. When done, the task status should be 'SUCCESS', withthe duration of the task indicated. Note that the view is set to automaticallyrefresh, so you do not need to refresh the browser to see the status change.

A successful task means that one or more segments have been built and are now picked up by our data servers.

Step 5. Query the data

You can now see the data as a datasource in the console and try out a query, as follows:

Click Datasources from the console header.

If the wikipedia datasource doesn't appear, wait a few moments for the segment to finish loading. A datasource isqueryable once it is shown to be 'Fully available' in the Availability column.

When the datasource is available, open the Actions menu () for thatdatasource and choose Query with SQL.

Notice the other actions you can perform for a datasource, including configuring retention rules, compaction, and more.

Run the prepopulated query,

SELECT * FROM 'wikipedia'to see the results.

Congratulations! You've gone from downloading Druid to querying data in just one quickstart. See the followingsection for what to do next.

Next steps

After finishing the quickstart, check out the query tutorial to further exploreQuery features in the Druid console.

Alternatively, learn about other ways to ingest data in one of these tutorials:

Druid Download For Mac Windows 10

Druid Download For Mac Download

- Loading stream data from Apache Kafka – How to load streaming data from a Kafka topic.

- Loading a file using Apache Hadoop – How to perform a batch file load, using a remote Hadoop cluster.

- Writing your own ingestion spec – How to write a new ingestion spec and use it to load data.

Druid Download For Mac Os

Remember that after stopping Druid services, you can start clean next time by deleting the var directory from the Druid root directory andrunning the bin/start-micro-quickstart script again. You will likely want to do this before taking other data ingestion tutorials,since in them you will create the same wikipedia datasource.